Motivation and proposed approach

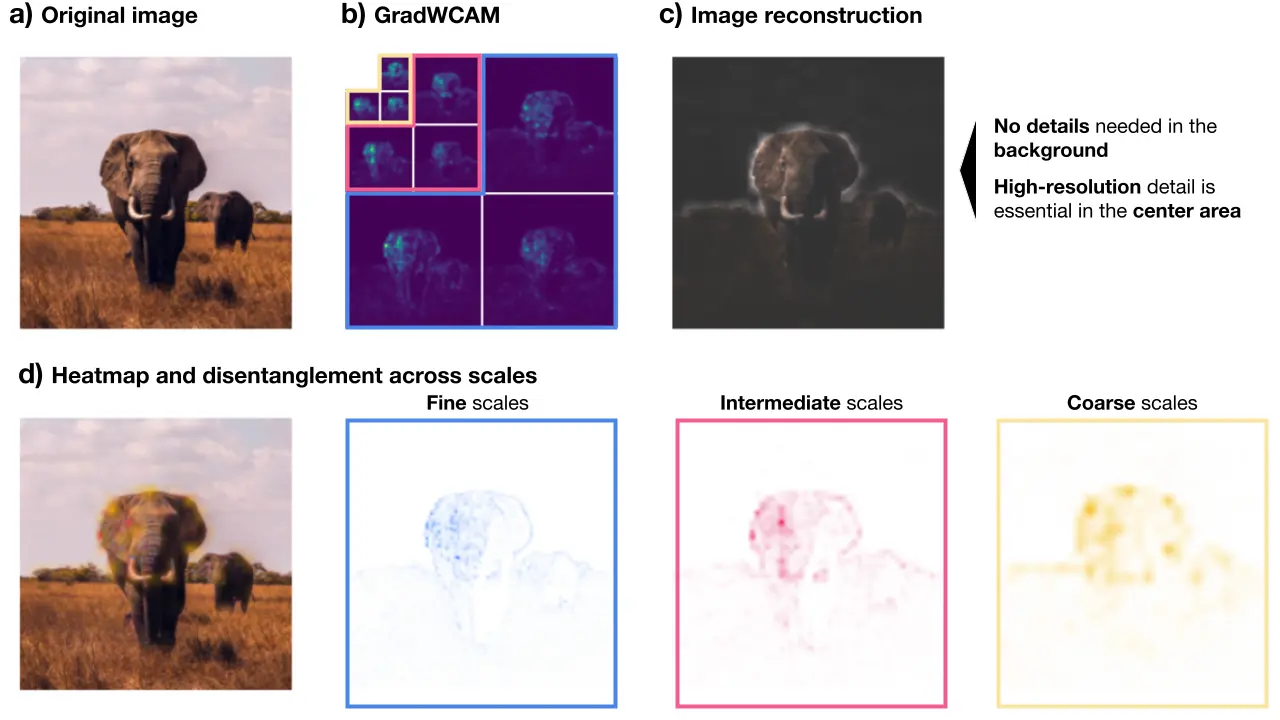

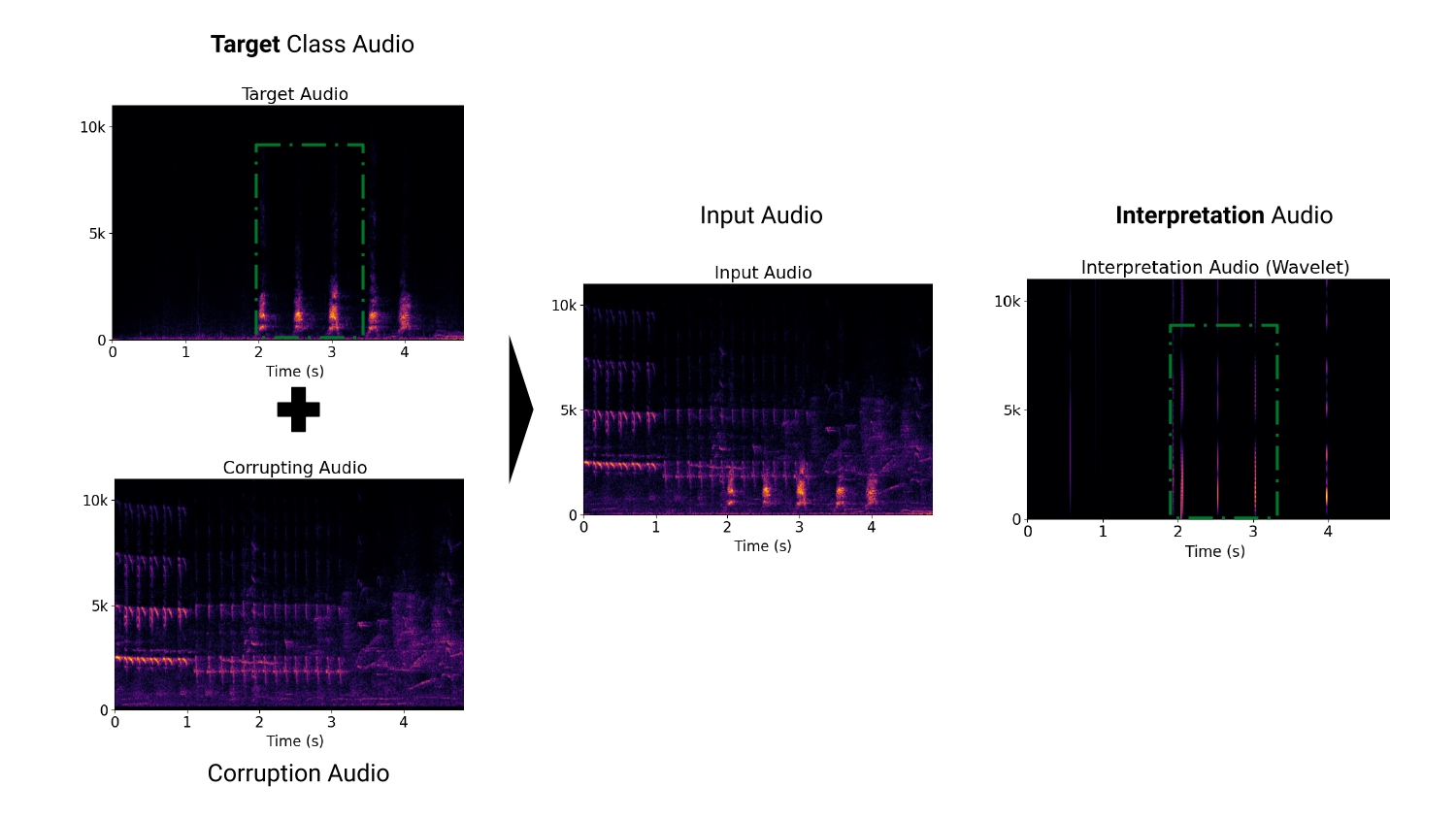

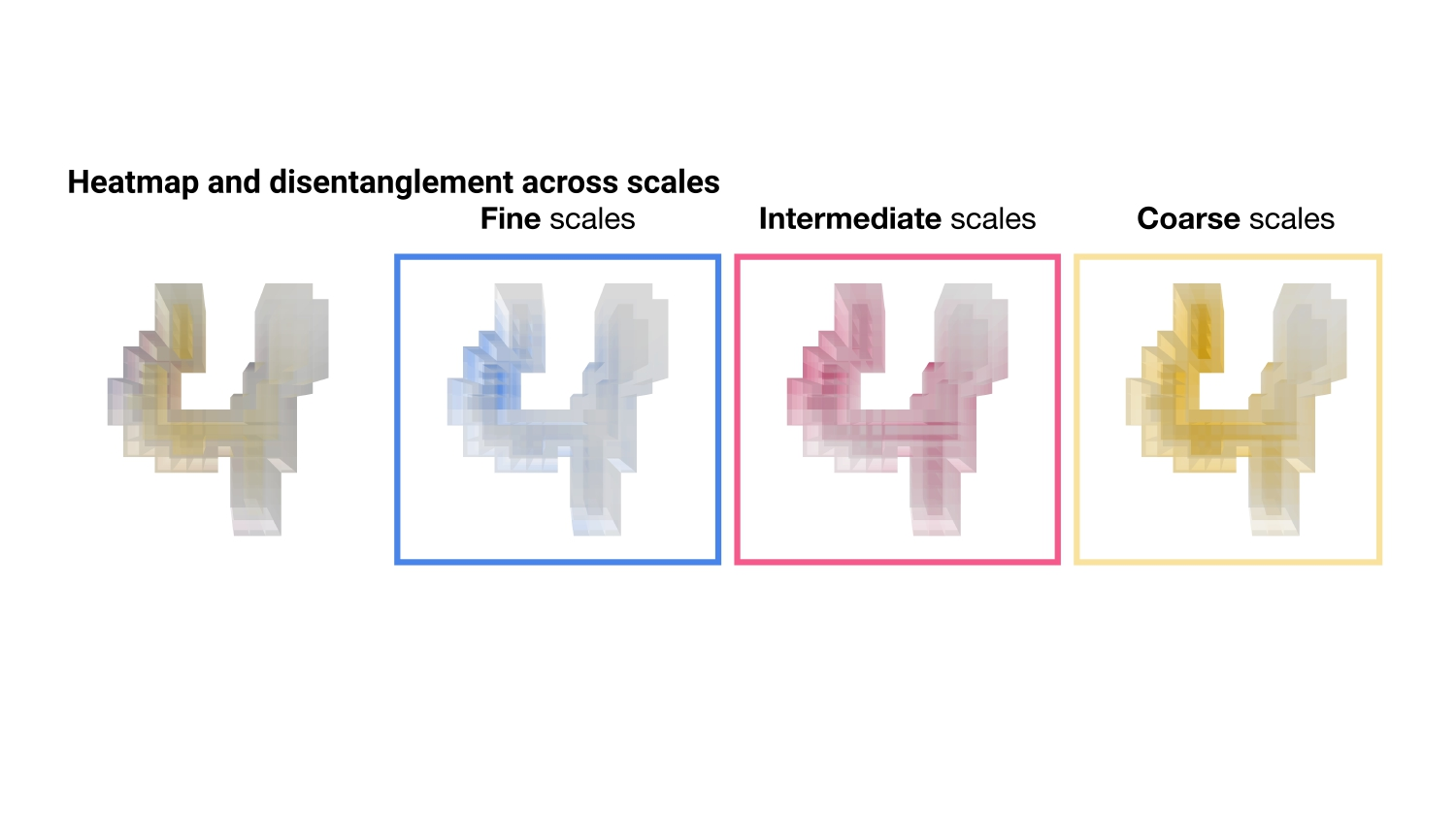

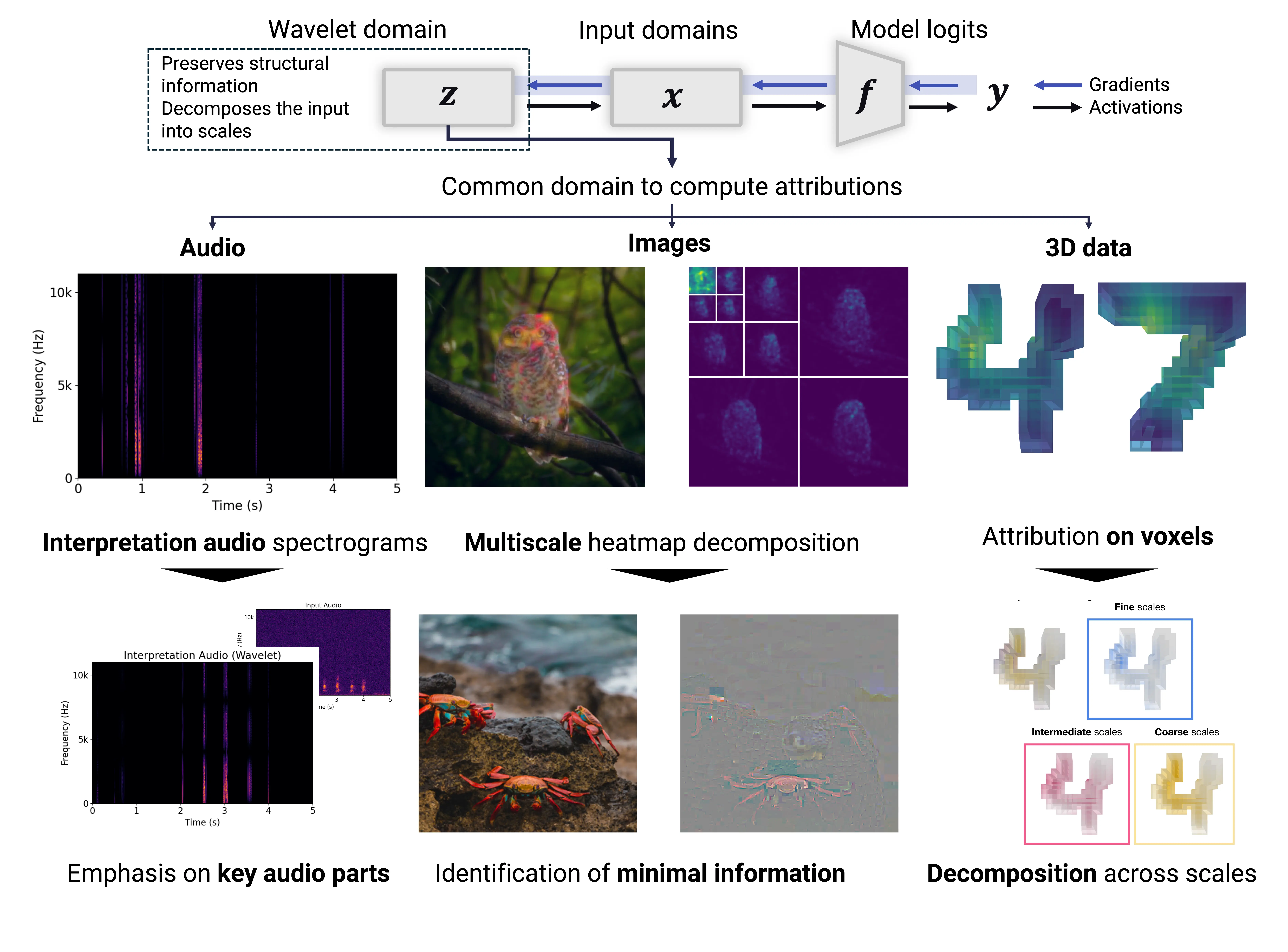

Feature attribution in the wavelet domain. WAM explains a model's decision for any input modality by decomposing it in the wavelet domain: it computes the gradient of the model's prediction with respect to the wavelet coefficients of the input (audio, images, volumes). Unlike pixels, wavelet coefficients preserve structural information about the input signal, so the explanation shows not only where the model focuses but also which scales and frequencies it relies on.

Explainable AI (XAI) methods, particularly feature attribution methods, are crucial for understanding the decision-making processes of deep neural networks.

Current feature attribution methods rely on a feature space, namely the pixel domain, that overlooks the inherent temporal, spatial, or geometric relationships within the data.

Wavelets offer a hierarchical decomposition that retains both spatial and frequency information, whereas pixel-based representations lose this structural context. Because wavelets are low-level features that can be defined for signals of any dimension (1D audio, 2D images, 3D volumes), they provide a stronger foundation for interpreting model decisions across diverse modalities.
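To make the idea of a gradient with respect to wavelet coefficients concrete, here is a minimal sketch in plain Python. It is not the WAM implementation: it assumes a one-level orthonormal Haar transform on a 1D signal, and a hypothetical `toy_model` (a sigmoid over a linear score) stands in for a real network. Finite differences are used in place of automatic differentiation. All function names are illustrative.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_dwt(x):
    """One-level orthonormal Haar transform of an even-length signal.

    Returns (approx, detail): coarse averages and local differences.
    """
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: rebuild the signal from its coefficients."""
    x = []
    for a, d in zip(approx, detail):
        x.append((a + d) / SQRT2)
        x.append((a - d) / SQRT2)
    return x


def toy_model(x, weights):
    """Hypothetical stand-in for a classifier: sigmoid of a linear score."""
    score = sum(w * v for w, v in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-score))


def wavelet_gradient(x, weights, eps=1e-6):
    """Gradient of the model output w.r.t. the Haar coefficients of x.

    The model only ever sees the reconstructed signal, so perturbing one
    coefficient measures how sensitive the prediction is to that
    scale/location. Central finite differences replace autodiff here.
    """
    approx, detail = haar_dwt(x)
    coeffs = approx + detail
    half = len(approx)

    def predict(c):
        return toy_model(haar_idwt(c[:half], c[half:]), weights)

    grads = []
    for i in range(len(coeffs)):
        up = list(coeffs)
        down = list(coeffs)
        up[i] += eps
        down[i] -= eps
        grads.append((predict(up) - predict(down)) / (2 * eps))
    return grads[:half], grads[half:]


signal = [1.0, 3.0, 2.0, 0.0]
weights = [0.5, -0.5, 0.25, 0.25]
grad_approx, grad_detail = wavelet_gradient(signal, weights)
```

With these toy weights, the model responds to the difference within the first pair of samples and to the sum within the second pair, so the attribution lands on the first detail coefficient and the second approximation coefficient. This is the kind of scale-aware information that a per-sample gradient does not expose directly.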
